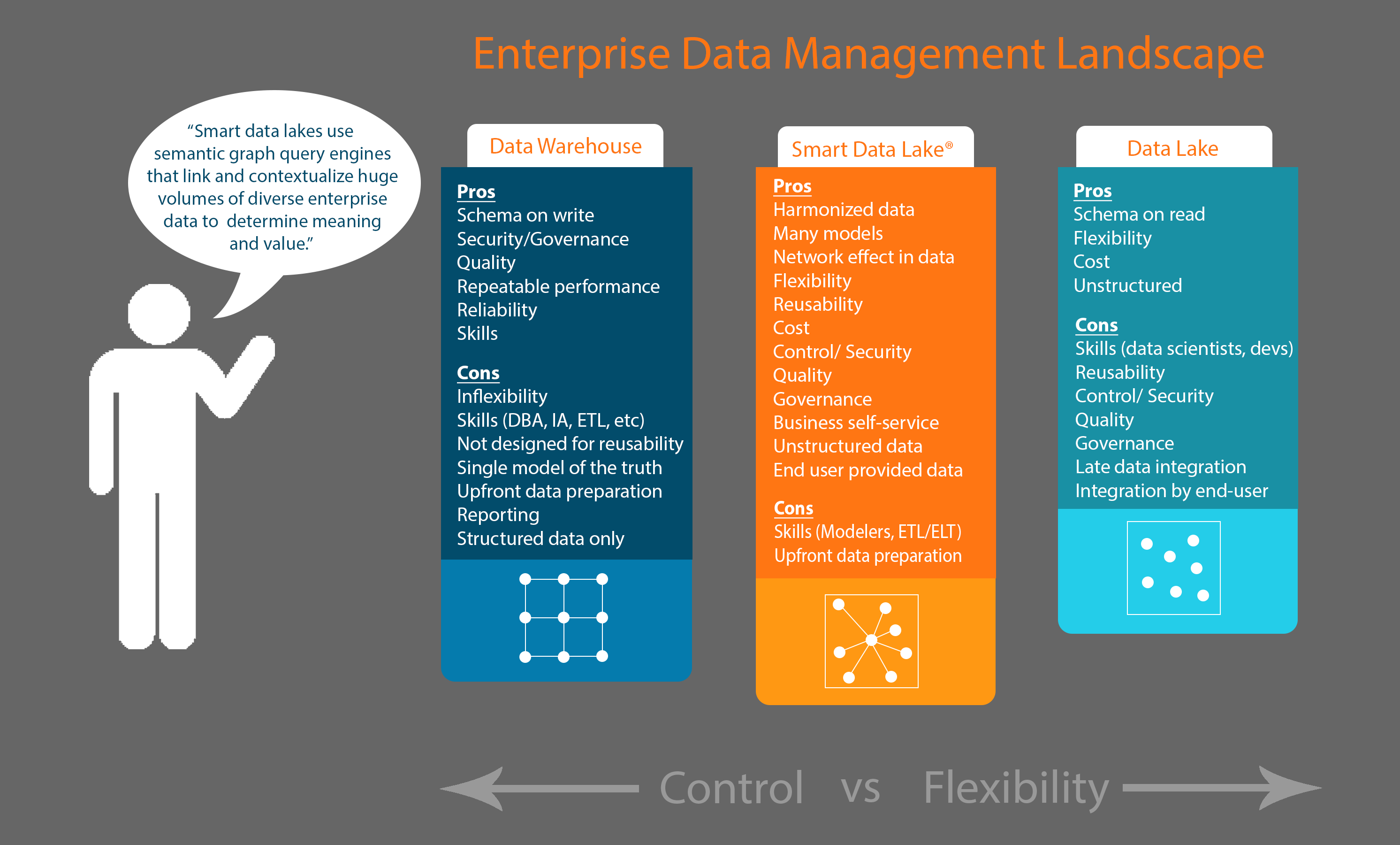

To pick up where my previous post left off: over the past few years, companies have looked to data lakes to relieve them of the expensive and time-consuming burden of creating data warehouses. However, as noted in the chart below, both present their own challenges and are imperfect solutions at best for effective enterprise data management, discovery and analysis.

The first-generation data lakes didn’t offer the data governance safeguards and controls that go into establishing a data warehouse. While business analysts, or more likely “data scientists” and “big data programmers”, got quick access to the latest enterprise data, the nature of the data lake made the data difficult to work with. Because the data lacked context, you often had no idea where it came from or what it represented. In addition, the big data tools required to query the data and extract value are complex and often demand extensive skill sets that are difficult to hire for.

Enter the era of the semantic-based or ‘smart’ data lake.

Smart data lakes® take advantage of semantic graphs, which solve the problem of attaching context and meaning to huge volumes of diverse enterprise data. These semantic graphs leverage ontology models that denote just what the data means from the point of view of the business, regardless of source, structure, type or schema. The rich ontology models make it practical to create and maintain much more accurate representations of the underlying realities that the data represents, including complex interrelationships and dependencies. The result is that much wider, more complex questions, referencing far more entity types at once, can be asked of the data far more quickly than the traditional BI toolchain (ETL, data warehouses, data marts and end user ad hoc BI tools) practically allows.
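To make the idea concrete, here is a minimal sketch of a semantic graph held as subject-predicate-object triples, with one question that spans three entity types (customer, order, product). Everything in it is an assumption for illustration: the `biz:` terms, the identifiers and the helper functions are hypothetical, and a real deployment would typically sit on a graph store with a query language such as SPARQL rather than on an in-memory Python set.

```python
# A triple is (subject, predicate, object).
Triple = tuple[str, str, str]

# Hypothetical facts, already expressed in the vocabulary of a business ontology.
GRAPH: set[Triple] = {
    ("customer:42", "rdf:type", "biz:Customer"),
    ("customer:42", "biz:name", "Acme Corp"),
    ("order:7", "rdf:type", "biz:Order"),
    ("order:7", "biz:placedBy", "customer:42"),
    ("order:7", "biz:contains", "product:9"),
    ("product:9", "rdf:type", "biz:Product"),
    ("product:9", "biz:name", "Widget"),
}


def match(graph, s=None, p=None, o=None):
    """Return triples matching the pattern; None acts as a wildcard."""
    return sorted(
        t for t in graph
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


def products_bought_by(graph, customer_name):
    """Walk customer -> order -> product and return the product names."""
    names = []
    for cust, _, _ in match(graph, p="biz:name", o=customer_name):
        # Only follow subjects that the ontology types as customers.
        if (cust, "rdf:type", "biz:Customer") not in graph:
            continue
        for order, _, _ in match(graph, p="biz:placedBy", o=cust):
            for _, _, product in match(graph, s=order, p="biz:contains"):
                names.extend(n for _, _, n in match(graph, s=product, p="biz:name"))
    return sorted(names)


print(products_bought_by(GRAPH, "Acme Corp"))
```

The point of the sketch is that the question is phrased in business terms (customers, orders, products) and the traversal never needs to know which source system each fact came from.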

By offering a highly reusable, “harmonized” data representation, the graph models give users the information they need for self-service data discovery, analytics and visualization across all entities and relationships in the data lake. This eliminates the need for the extensive preparation required of data warehouses as well as the specialist data wrangling skills needed for effective analysis in first-generation data lakes. IT personnel still need to do some upfront preparation to ingest data into smart data lakes and harmonize it to an ontology, but it is far less than the work required for data warehouses and it achieves a far more flexible, repurposable result.
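As a rough illustration of what harmonizing to an ontology can look like, the sketch below maps records from two sources with different column names onto the same ontology properties. The source names, column names, `biz:` terms and the `harmonize` function are all hypothetical; this is an assumption about the general shape of the step, not a description of any particular product.

```python
# Hypothetical per-source mappings from local column names to ontology properties.
MAPPINGS = {
    "crm": {"cust_nm": "biz:name", "cust_email": "biz:email"},
    "billing": {"CustomerName": "biz:name", "Balance": "biz:balance"},
}


def harmonize(source, entity_id, record):
    """Turn one source record into triples that use ontology property names.

    Columns without a mapping are skipped rather than guessed at.
    """
    mapping = MAPPINGS[source]
    return [
        (entity_id, mapping[column], str(value))
        for column, value in record.items()
        if column in mapping
    ]


crm_triples = harmonize("crm", "customer:42", {"cust_nm": "Acme Corp", "legacy_flag": "Y"})
billing_triples = harmonize("billing", "customer:42", {"CustomerName": "Acme Corp", "Balance": 120})
print(crm_triples + billing_triples)
```

Once both sources land on `biz:name`, downstream queries can treat them as one entity description, which is where the reuse comes from.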

Semantic technologies are also used to maintain the metadata needed for governance and security policies, which supports the long-term sustainability of data lakes. Organizations can implement access to data in accordance with governance protocols and workflows by specifying who can and cannot view data elements. So even though the data is gathered in one place, the restrictions and permissions on its use are as enforceable as if the data were siloed according to the governance mandates of the individual applications from which it originated. This model-driven governance and access control empowers users to ask complex questions that span the breadth of their data (i.e., many data sets simultaneously) while retaining trust that the answers stem from high-quality, secure data.
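One way to picture model-driven access control is a policy attached to ontology properties rather than to source tables, applied before any query result is returned. The sketch below is a simplified assumption: the roles, the `POLICY` table and the `visible_triples` function are hypothetical, and anything without an explicit policy entry is hidden by default.

```python
# Hypothetical policy: ontology property -> roles allowed to view it.
POLICY = {
    "biz:name": {"analyst", "finance"},
    "biz:balance": {"finance"},
}


def visible_triples(triples, role):
    """Keep only triples whose property the given role may view (default deny)."""
    return [t for t in triples if role in POLICY.get(t[1], set())]


data = [
    ("customer:42", "biz:name", "Acme Corp"),
    ("customer:42", "biz:balance", "120"),
    ("customer:42", "biz:taxId", "hidden-by-default"),
]

print(visible_triples(data, "analyst"))
print(visible_triples(data, "finance"))
```

Because the rule is keyed on the ontology property, it applies no matter which source the fact originally came from.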

Interest in smart data lakes for managing big data deployments will continue to increase as organizations realize that they can gain all of the benefits of data warehouses and first-generation data lakes while neutralizing their drawbacks.

To learn more about smart data lakes, watch our webinar "How to Build a Smart Data Lake" here.